Our Technology

State-space neural networks, advanced network training, and innovative compiler technology come together to deliver a breakthrough in power-efficient computation. This approach lets AI-driven applications achieve high performance at low power, redefining what’s possible in edge computing.

State Space Models

The Legendre Memory Unit (LMU), a patented invention, introduces a new class of models: state space neural networks. These models are highly data-efficient when processing time series data, setting new standards in the field.

Game-Changing Technology

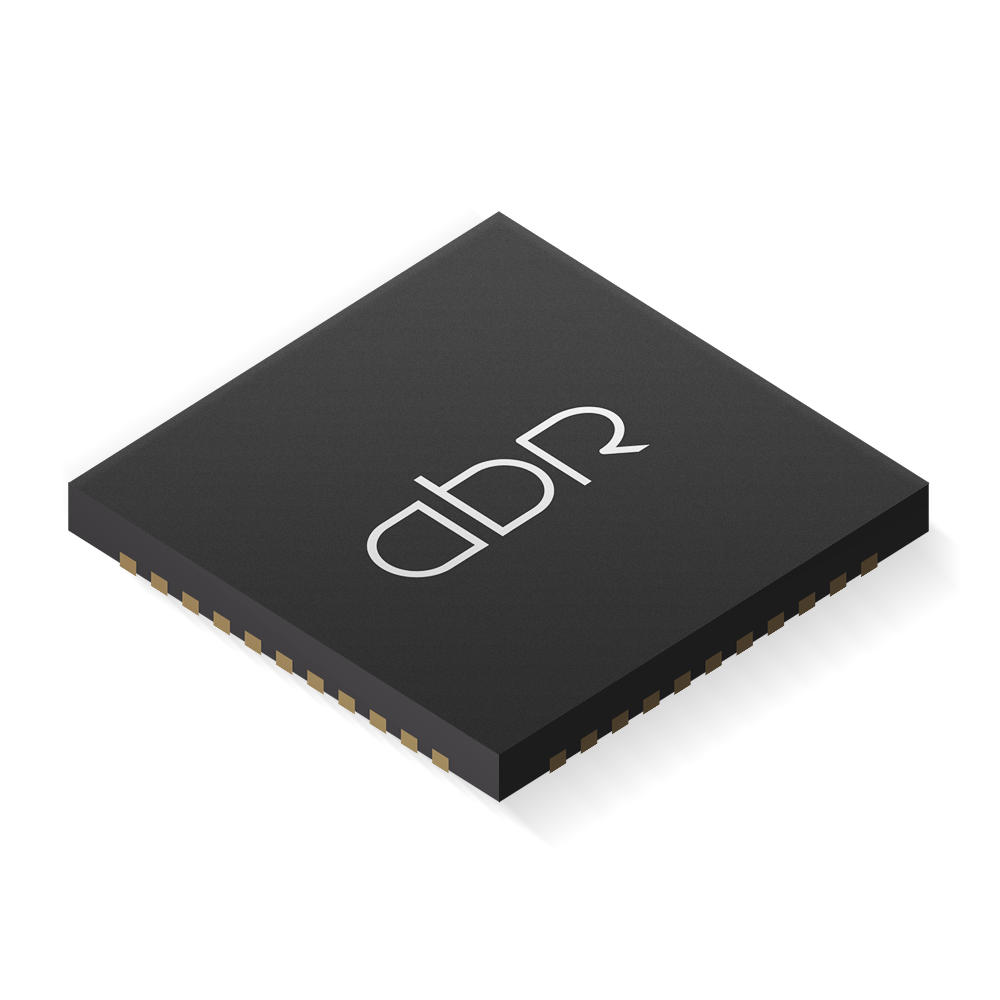

The TSP1 combines state-space neural networks, superior model scaling, custom optimized hardware, and enhanced battery management with advanced training and compiler technology. Together, these innovations make the TSP1 a leader in AI processing, delivering powerful, efficient solutions for a wide range of applications.

Powering Tomorrow's Devices

The TSP1 is designed for diverse applications, from voice interfaces and medical devices to IoT and industrial electronics. Its capabilities also extend to AI infrastructure, bringing efficient AI processing to many sectors.

First patented state-space network

The Legendre Memory Unit (LMU) is the first in a new class of state space networks that are far more computationally efficient than other popular network architectures.

State-of-the-art benchmarks

The TSP1 achieves state-of-the-art results on AI and machine learning benchmarks, demonstrating top-tier performance that meets the highest industry standards.

Data efficiency

The TSP1 chip pairs the data efficiency of state space networks with low power draw, processing complex AI tasks on under 10 mW. This makes it an ideal choice for energy-conscious AI applications.

Better scaling for large models

The TSP1 scales well to large AI models while maintaining robust performance and speed, which makes it ideal for applications such as deep learning and advanced analytics.

Optimized TSP hardware

The TSP1's custom hardware is optimized for time series processing. It delivers peak performance and efficiency with minimal power consumption, making it well suited to edge computing.

Natural Language Interfaces

The TSP1 supports advanced natural language interfaces, enabling seamless interaction with AI devices. This makes it a strong fit for AR/VR, smart home, and smart medical devices.
